Database

In order to develop an AI system that successfully identifies food groups from food images taken in everyday scenarios, we require a large amount of appropriately annotated data. We therefore created a dataset of images captured under free-living conditions. In our previous study4, a group of expert academic dietitians identified 31 categories of food items that are relevant for MDA calculation.

A group of 10 non-expert annotators was recruited to annotate the individual food items and their serving sizes in each food image. We chose serving sizes because they are easier for a non-expert in dietetics to approximate using body parts (e.g. handful) or household measures (e.g. cup). We used the serving sizes provided by the British Nutrition Foundation21. Table 1 shows the number of images that were annotated by a specific number of annotators.

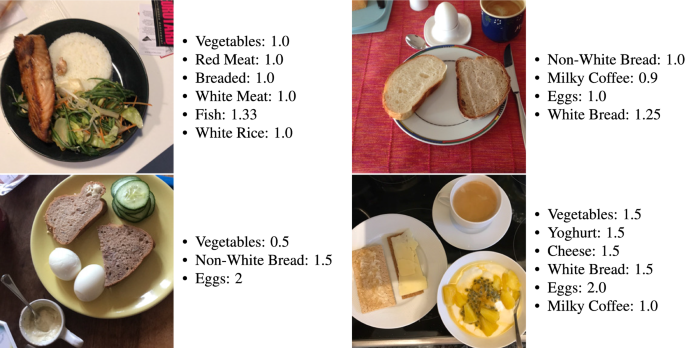

The annotators were provided with basic instructions, developed by experienced dietitians, on how to identify the 31 food categories and estimate the serving sizes. We collected a total of 11,024 images, along with their annotated labels and serving sizes. Of these, 9,888 were annotated by at least 4 annotators. Each image can contain a varying number of labels, and the average number of labels per image is 3.6. A subset of 293 images was taken as the testing set. An expert dietitian (i.e., with clinical experience and more than 5 years of work in the field) refined the labels of the testing set, so that the system can be evaluated on a clean testing set. Some sample images from the training and the testing set can be seen in Fig. 1.

Inter-annotator agreement metric

In this section, we present a new metric that quantifies the agreement of annotators in terms of both food recognition and serving size estimation. Since the annotation was performed by non-expert annotators, the dataset contains natural label noise that we wanted to estimate. We used the Inter-Annotator Agreement (IAA) score for each of the 31 food categories to quantify the degree of label noise in the dataset. However, since the labels of each image are not mutually exclusive (each image can contain more than one food item), IAA metrics such as Cohen’s and Fleiss’s kappa, which are frequently used in ML problems, could not be applied in our case. Moreover, we also had to consider the differences in the serving size estimations between the annotators. For these reasons, we have rephrased the IAA score as follows: for every category c in each image, we calculate the normalized summation of the squared distances (\(\tilde{ssd_{c}}\)) between the serving sizes estimated by each annotator, and the IAA for a specific category, as follows:

$$\begin{aligned} \tilde{ssd_{c}}= & {} \left( \dfrac{n(n-1)}{2}\right) ^{-1}\sum _{i=1}^{n}\sum _{j=i+1}^{n} (p_{ic} - p_{jc})^2 \end{aligned}$$

(1)

$$\begin{aligned} IAA_{img,c}= & {} \sqrt{(1-ssd_{c})} \cdot \dfrac{\max (\hat{n}, n-\hat{n})}{n} \end{aligned}$$

(2)

where \(p_{ic}\), \(p_{jc}\) are the serving size annotations of annotators i, j, respectively, for food category c. The number of annotators that annotated the specific category is \(\hat{n}\) and the total number of annotators is n (where \(\hat{n}\le n\)). \(ssd_{c}\) plays the role of disagreement between the annotators for food category c, and the term \(\left[ \dfrac{n(n-1)}{2}\right] ^{-1}\) normalizes its value between 0 and 1. The operator \(\max (\cdot )\) returns the number of annotators that annotated c if they outnumber the annotators that did not annotate it, and vice versa. \(IAA_{img,c}\) is the IAA for the specific image for category c, and \(\overrightarrow{IAA_{c}}\) is a vector that contains the \(IAA_{img,c}\) for all images that include category c. The total IAA for all the images and all categories C is then defined as the weighted average over all categories, based on the number of times each category appears in the dataset (\(N_{i}\)):

$$\begin{aligned} Total_{IAA} = \dfrac{\sum _{i=1}^{C} N_{i} \cdot mean(\overrightarrow{IAA_{i}})}{\sum _{i=1}^{C}N_{i}} \end{aligned}$$

(3)

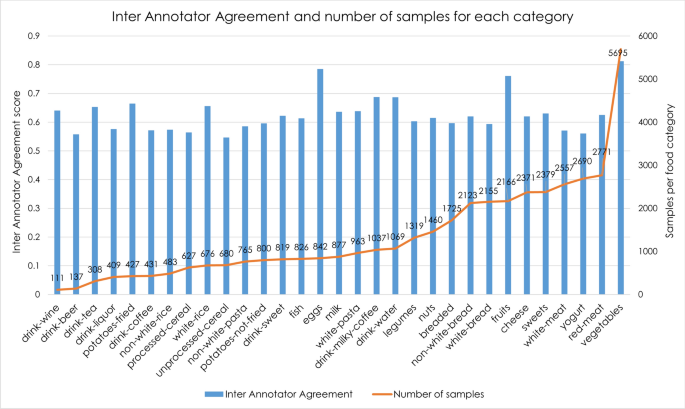

We calculated the IAA for each category for the 9,104 images annotated by 5 annotators (Fig. 2). In the figure, the categories are ranked in ascending order of the frequency of their samples in the training set. The \(Total_{IAA}\) for the entire annotated dataset is equal to 64.7%, indicating that the training dataset contains label noise.
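As an illustration, Eqs. (1) to (3) can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the code used in the study: serving sizes are assumed to be rescaled to [0, 1], an annotator who did not mark a category is assumed to contribute a serving size of 0, \(N_{i}\) is taken to be the number of images that contain category i, and the clamp inside the square root is a safeguard added here. All function names are hypothetical.

```python
from itertools import combinations
from math import sqrt


def normalized_ssd(servings):
    """Eq. (1): squared serving-size differences averaged over all annotator pairs."""
    n = len(servings)
    if n < 2:
        raise ValueError("at least two annotators are needed")
    n_pairs = n * (n - 1) / 2
    return sum((p_i - p_j) ** 2 for p_i, p_j in combinations(servings, 2)) / n_pairs


def iaa_image_category(servings):
    """Eq. (2) for one image and one category.

    servings holds one value per annotator; 0 means "category not annotated"
    (an assumption of this sketch).
    """
    n = len(servings)
    n_hat = sum(1 for p in servings if p > 0)  # annotators who marked the category
    ssd = normalized_ssd(servings)
    agreement = sqrt(max(0.0, 1.0 - ssd))  # clamp is a safeguard, not part of Eq. (2)
    return agreement * max(n_hat, n - n_hat) / n


def total_iaa(iaa_by_category):
    """Eq. (3): average of per-category mean IAA, weighted by category frequency.

    iaa_by_category maps a category name to the non-empty list of its
    per-image IAA values.
    """
    weighted_sum = 0.0
    total_count = 0
    for values in iaa_by_category.values():
        n_i = len(values)  # assumed: N_i = number of images with this category
        weighted_sum += n_i * (sum(values) / n_i)
        total_count += n_i
    return weighted_sum / total_count
```

With these assumptions, full agreement (all annotators giving the same serving size) yields an IAA of 1, and an even split between annotating and not annotating a category is penalized by both factors of Eq. (2).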

Food recognition and serving size estimation

In this section, we first present the network architecture that is used to perform food item recognition and serving size estimation. The presence of label noise in the training dataset, however, can heavily deteriorate the results of both tasks, since CNNs tend to “learn” from noisy labels and generalise poorly on a clean testing set22. Therefore, a method that accounts for the label noise of the dataset is required. In refs. 23 and 24, a noise-adaptation layer is appended to a neural network (NN) to learn the distribution between the noisy labels and the true, hidden ones. Other methods25,26 rely on a small, noise-free subset that can help with learning from noisy data. Moreover, there are methods that train two NNs, each of which separates the clean from the noisy samples that are then used by the other NN27,28. In this section, we also explain the noise-robust training procedure that we use.

For the network architecture, we use the same architecture as described in our previous work4. We use (a) a CNN to extract features from images and (b) the pre-trained GloVe29 to extract word semantic features. A Graph CNN (GCNN)4,30 that uses these features and the correlation between the different food items is trained to recognize the food categories and their serving sizes.

For the training procedure, we adapt the methodology of refs. 27,31 to the multi-label problem. Specifically, two networks are trained simultaneously, and each model divides the dataset into a clean and a noisy subset to be used by the other model. Then, in order to counter the label noise, the samples are interpolated with each other following ref. 32, so that the models learn to behave linearly between training samples.

Based on the work of DivideMix27, two NNs are initially trained for a few epochs (“warmup”). This way, two individual NNs can make predictions without overfitting to the label noise.

A Gaussian Mixture Model (GMM) is then fit on the per-sample loss of each network to divide the dataset into a clean set and a noisy, unlabeled set based on a fixed threshold. The two subsets are used by the other network, to avoid error accumulation. At each epoch, there are two iterations in which one model is trained while the other is kept fixed. Initially, both the clean and the noisy sets are augmented by applying random crops and horizontal flips to the images. For the noisy set, the labels are replaced by the average of the predictions from both networks on the augmentations, as in Eq. (4), while for the clean set, the labels are refined based on their probability of being clean, as in Eq. (5):

$$\begin{aligned} Y_{noisy}= & {} \dfrac{1}{2M}\sum _{m}(p_{1}(U_{m}) + p_{2}(U_{m})) \end{aligned}$$

(4)

$$\begin{aligned} Y_{clean}= & {} w_{clean}y_{clean} + (1-w_{clean})\dfrac{1}{M}\sum _{m}p_{1}(L_{m}) \end{aligned}$$

(5)

where M is the number of augmentations, \(p_{1}\) is the model to be trained, \(p_{2}\) is the fixed model, \(U_{m}\) and \(L_{m}\) are the noisy and the clean subsets, respectively, \(w_{clean}\) are the probabilities of the labeled samples being clean, and \(y_{clean}\) are the original labels of the clean subset. \(Y_{noisy}\) and \(Y_{clean}\) refer to the final labels of the noisy (\(X_{noisy}\)) and the clean (\(X_{clean}\)) subset, for both the food category and the serving size estimation.
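The two label updates of Eqs. (4) and (5) can be illustrated for a single sample as follows. This is a hedged sketch rather than the training code: predictions are represented as plain lists (one vector per augmentation), and the function names `co_guess` and `refine` are hypothetical.

```python
def co_guess(preds_model1, preds_model2):
    """Eq. (4): average the predictions of both networks over the M augmentations.

    Each argument is a list of M prediction vectors for one noisy sample.
    """
    m = len(preds_model1)
    n_outputs = len(preds_model1[0])
    return [
        sum(preds_model1[a][k] + preds_model2[a][k] for a in range(m)) / (2 * m)
        for k in range(n_outputs)
    ]


def refine(y_clean, w_clean, preds_model1):
    """Eq. (5): blend the original label with the trained model's mean prediction.

    w_clean is the probability that the sample's label is clean.
    """
    m = len(preds_model1)
    mean_pred = [
        sum(preds_model1[a][k] for a in range(m)) / m for k in range(len(y_clean))
    ]
    return [w_clean * y + (1.0 - w_clean) * p for y, p in zip(y_clean, mean_pred)]
```

A sample that is very likely clean (\(w_{clean}\) close to 1) therefore keeps its original label almost unchanged, whereas a doubtful sample is pulled towards the network's own prediction.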

In the end, the data are further augmented27,31,32. Specifically, for each sample i from a batch b, the augmented image, the corresponding labels, and the serving sizes are mixed as follows:

$$\begin{aligned} z'_{i,b}=\lambda z_{i,b} + (1-\lambda )z_{j,b} \end{aligned}$$

(6)

where z is either the augmented image, the label, or the serving size, j is a random sample from the batch, and \(\lambda\) is a random sample from the beta distribution (\(\lambda >0.5\)). This way, the networks are trained to give linear predictions between samples, even if the labels are noisy.
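The interpolation of Eq. (6) can be sketched as below. Several details are assumptions of this sketch and are not stated in the text: the beta distribution is taken to be symmetric with a hypothetical parameter `alpha`, the condition \(\lambda >0.5\) is enforced by taking \(\max (\lambda , 1-\lambda )\), and a separate \(\lambda\) and partner j are drawn for every sample. Images, labels, and serving sizes are represented as flat lists.

```python
import random


def sample_lambda(alpha=4.0, rng=random):
    """Draw lambda from Beta(alpha, alpha) and fold it into [0.5, 1]."""
    lam = rng.betavariate(alpha, alpha)
    return max(lam, 1.0 - lam)  # keeps sample i dominant in the mix


def mix(z_i, z_j, lam):
    """Eq. (6) applied element-wise to two equally sized vectors."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(z_i, z_j)]


def mixup_batch(batch, alpha=4.0, rng=random):
    """Mix every sample with a random partner from the same batch.

    batch is a list of dicts with the keys "image", "labels" and "servings";
    the same lambda is used for all three fields of a sample.
    """
    mixed = []
    for sample in batch:
        partner = rng.choice(batch)
        lam = sample_lambda(alpha, rng)
        mixed.append({key: mix(sample[key], partner[key], lam) for key in sample})
    return mixed
```

Because \(\lambda\) stays above 0.5, each mixed sample remains closer to its own image and labels than to those of its partner.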

The augmented input data are then fed into the network to be trained. Since there are two targets to optimize, we use (a) the binary cross-entropy loss for the mixed clean labels and (b) the mean squared error loss for the mixed noisy labels and the serving sizes. At the beginning, the loss from the noisy set is discarded, as the models are not yet ready to predict the noisy labels, but its weight is gradually increased as the training procedure progresses.
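One possible way to combine these losses is sketched below. The text does not specify the ramp-up schedule or exactly how the terms are summed, so the linear ramp, the `ramp_epochs` value, and the structure of `total_loss` are assumptions; the label weight of 1, the serving-size weight of 0.1, and the 5 warmup epochs are the values reported in this section.

```python
import math


def bce(targets, probs, eps=1e-7):
    """Mean binary cross-entropy between (possibly soft) targets and probabilities."""
    total = 0.0
    for t, p in zip(targets, probs):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(targets)


def mse(targets, preds):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(targets, preds)) / len(targets)


def noisy_weight(epoch, warmup_epochs=5, ramp_epochs=5, max_weight=1.0):
    """Weight of the noisy-set loss: 0 right after warmup, then a linear ramp (assumed)."""
    progress = (epoch - warmup_epochs) / ramp_epochs
    return max_weight * min(max(progress, 0.0), 1.0)


def total_loss(clean_labels, clean_probs, noisy_labels, noisy_probs,
               servings, serving_preds, epoch,
               label_weight=1.0, serving_weight=0.1):
    """BCE on clean labels + ramped MSE on noisy labels + down-weighted serving MSE."""
    label_loss = bce(clean_labels, clean_probs)
    label_loss += noisy_weight(epoch) * mse(noisy_labels, noisy_probs)
    serving_loss = mse(servings, serving_preds)
    return label_weight * label_loss + serving_weight * serving_loss
```

Under this schedule the noisy-set term contributes nothing in the first epoch after warmup and reaches its full weight `ramp_epochs` epochs later.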

The model with the best performance was integrated into the end-to-end automated MDA adherence system which automatically performs food recognition and serving estimation and outputs the MDA score on a weekly basis, along with suggestions for a healthier diet, closer to the MD.

We used the ResNet-101 model33, pre-trained on ImageNet34, as the feature extractor. We used the Adam optimizer with a learning rate of \(10^{-4}\) and a batch size of 32 for the “warmup” stage for 5 epochs, and a learning rate of \(10^{-5}\) and a batch size of 12 for the remaining 10 epochs. We used a threshold of 0.5 for the output of the GMM to distinguish the clean from the noisy subset and \(M = 2\) augmentations for each input image. We also used a loss weight of 1 for the labels and 0.1 for the serving sizes throughout the procedure, since the prediction of the food categories is more important.
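To make the GMM-based split with the 0.5 threshold concrete, the following sketch fits a one-dimensional, two-component Gaussian mixture to per-sample losses with a plain EM loop and treats the low-mean component as "clean". It is a simplified stand-in written with the standard library only; the initialisation, iteration count, and variance floor are assumptions, and the study may well have relied on an existing GMM implementation instead.

```python
import math


def normal_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def responsibilities(losses, weights, means, variances):
    """E-step: posterior probability of each component for every loss value."""
    resp = []
    for x in losses:
        dens = [weights[k] * normal_pdf(x, means[k], variances[k]) for k in range(2)]
        total = sum(dens)
        resp.append([d / total for d in dens] if total > 0 else [0.5, 0.5])
    return resp


def clean_probabilities(losses, iters=100, min_var=1e-6):
    """Fit a two-component 1-D GMM and return P(low-loss component | loss)."""
    lo, hi = min(losses), max(losses)
    means = [lo, hi]  # start one component at each extreme
    spread = max((hi - lo) ** 2 / 4.0, min_var)
    variances = [spread, spread]
    weights = [0.5, 0.5]
    for _ in range(iters):
        resp = responsibilities(losses, weights, means, variances)
        for k in range(2):  # M-step
            n_k = sum(r[k] for r in resp)
            if n_k < 1e-12:
                continue
            means[k] = sum(r[k] * x for r, x in zip(resp, losses)) / n_k
            var_k = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, losses)) / n_k
            variances[k] = max(var_k, min_var)
            weights[k] = n_k / len(losses)
    resp = responsibilities(losses, weights, means, variances)
    clean = 0 if means[0] <= means[1] else 1
    return [r[clean] for r in resp]


def split_clean_noisy(losses, threshold=0.5):
    """Return (clean indices, noisy indices) using the clean-probability threshold."""
    probs = clean_probabilities(losses)
    clean_idx = [i for i, p in enumerate(probs) if p > threshold]
    noisy_idx = [i for i, p in enumerate(probs) if p <= threshold]
    return clean_idx, noisy_idx
```

The clean probabilities returned here would also play the role of \(w_{clean}\) in Eq. (5).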

Mediterranean diet adherence score

The weekly MDA score can be calculated based on food items that are consumed on a (a) per-meal, (b) daily, and (c) weekly basis3. A set of rules has been defined by expert dietitians4 and is further refined here. Firstly, the 31 food categories that the network predicts must be clustered into 13 coarser categories, which share similar nutritional values, namely: vegetables, fruits, cereal, nuts, dairy products, alcoholic beverages, legumes, fish, white meat, red meat, eggs, sweets, and potatoes. Apart from these categories, we also consider olive oil, which plays a major role in the MD. While we use our automated food recognition system to recognize most of the food types, identifying oil used in the preparation of a food is extremely challenging. Hence, we give the user the option to enter this category manually and use it for the weekly MDA scoring.

(a) Meal-based Score: Fruits, vegetables, and olive oil add points when they are consumed within any meal (breakfast, lunch, dinner, or snack), while cereal adds to the score only when consumed as part of the main meals (breakfast, lunch, dinner). For each of these food categories, the score is summed for the whole day, with a maximum score of 3/7 points per day (Supplementary Table 1).

(b) Daily-based Score: Nuts, dairy products, and alcoholic beverages are not related to meals, but give points if they are consumed throughout the day (Supplementary Table 2).

(c) Weekly-based Score: For the food categories that are counted on a weekly basis (legumes, fish, eggs, white meat, red meat, sweets, potatoes), the servings are summed up for the whole week to give the respective points (Supplementary Table 3).

The scores of the food categories that are counted on a (a) per-meal and (b) daily basis are summed for the whole week and added to the (c) weekly-based score to give the final weekly MDA score. The score ranges from 0 (no adherence to MD) to 24 (highest adherence to MD).

We then adapt the score using Eq. (7). The \(MDA_{0-100}\) score is then normalized between 0% and 100% so that it can be interpreted more easily (Supplementary Fig. 1) and so that a small increase in the original score is mapped to a larger increase in the \(MDA_{0-100}\) score, encouraging participants to follow a healthier diet.

$$\begin{aligned} MDA_{0-100} = (\ln (MDA_{0-24} +1))^2 \end{aligned}$$

(7)
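The transformation of Eq. (7) followed by the normalization to a percentage can be sketched as follows. The exact normalization is not spelled out in the text; this sketch assumes that the transformed score is divided by its value at the maximum raw score of 24, which is only one plausible reading. The function names are hypothetical.

```python
import math

MAX_RAW_SCORE = 24  # upper end of the weekly MDA score range


def mda_transformed(raw_score):
    """Eq. (7): squared natural logarithm of the shifted raw score."""
    return math.log(raw_score + 1.0) ** 2


def mda_percent(raw_score):
    """Rescale Eq. (7) to 0-100%, assuming division by its value at 24."""
    if not 0 <= raw_score <= MAX_RAW_SCORE:
        raise ValueError("raw MDA score must lie between 0 and 24")
    return 100.0 * mda_transformed(raw_score) / mda_transformed(MAX_RAW_SCORE)
```

Under this assumption a raw score of 0 maps to 0% and a raw score of 24 maps to 100%, while a mid-range raw score of 12 maps to more than 50%, so progress in the lower and middle part of the scale is rewarded more visibly.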

Smartphone application

The smartphone application consists of an interface which allows end-users to collect images of their daily meals and annotate them. Using the smartphone application, a user can capture a photo of a meal/food item. The user can also select the meal type and, optionally, choose the food categories that appear in the image, to be used for validation purposes (Supplementary Fig. 2). However, annotating olive oil in the image is crucial for the MDA scoring since it is not automatically recognized. While we highly encouraged the users participating in the study to take photos of their meals, they were provided with the option to also log only a textual description of their meals and annotate the MDA categories present in the meal. Once the users’ images are uploaded to the Oviva AG35 platform, an end-to-end system runs the food recognition and serving size estimation algorithms and applies the MDA rules to calculate the weekly MDA score for the patient. At the end of each week, the system sends out a detailed report to each user regarding their weekly MDA score. The report consists of four parts:

(1) An MD Explainer, which reminds the user of the key points of the MD.

(2) A colored percentage weekly score of their MDA (Supplementary Fig. 3).

(3) A traffic light system regarding certain food categories important to the MD. If users were on track with a category, it was marked Green, while categories that needed further improvement were marked either Yellow or Red (Supplementary Fig. 3).

(4) Detailed recommendations on how to improve the MDA score for each food category. These recommendations are provided only for the categories whose traffic lights were displayed as Red or Yellow.

Feasibility study

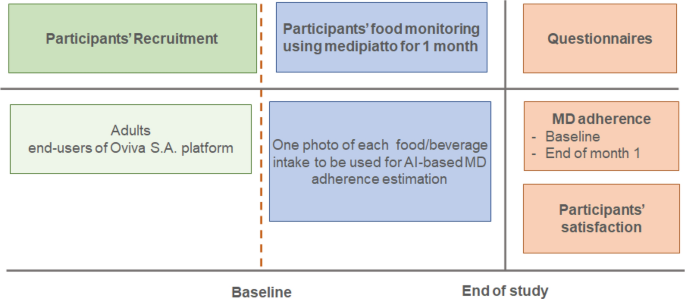

The outline of the feasibility study is shown in Fig. 3. The goal was to recruit at least 20 end-users of the Oviva AG platform (Body Mass Index \(>27\hbox { kg/m}^{2}\)). The study consisted of three stages: (i) the baseline, or the trial preparation stage, (ii) the duration of the trial, which involved the participants’ food monitoring using the medipiatto system for 1 month, and (iii) the end of the study, which involved the calculation of the self-reported MDA of the participants and the obtaining of answers to trial evaluation questionnaires that were handed out to both participants and the dietitians who recruited them.

During the baseline stage, the users were asked to fill out a 15-item validated food frequency questionnaire (FFQ) to assess their self-reported MDA score and collect information about their current food intake and dietary habits, based on a previous study9. The self-assessment questionnaire is a multiple-choice questionnaire with each question contributing points, to a total score of 30 (Supplementary Table 4). The participants also reported their sex, age, height, weight, highest level of educational attainment, current employment status, and nationality (demographics).

During the trial stage, the participants had to use the newly introduced system for a period of one month. They were asked to take photos of their food/beverage intakes using the mobile app and, optionally, to annotate the food categories. At the end of each week, the participants received their percentage MDA score, a traffic light color system that shows their scores on 8 food categories important to the MD, an explanatory sheet regarding the MD, and suggestions to improve their MDA score. The 8 food categories that we chose to present are fruits, vegetables, cereals, nuts, legumes, fish, red meat, and sweets, since a slight change in their consumption can be easily observed in the weekly MDA score.

Finally, at the end of the study, after a period of one month from the start, the participants were asked to fill out the same 15-item questionnaire to assess their self-reported MDA score, as well as a qualitative feedback questionnaire regarding their satisfaction with using the system. A qualitative feedback questionnaire was also administered to the dietitians treating the participants.

Statistical analysis

All analyses were performed using the SciPy library for Python. To measure statistical significance, we performed the paired t-test. Results were considered statistically significant at P<0.05.
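For illustration, a paired t-test on questionnaire scores before and after the trial could look as follows. The numbers are hypothetical toy values, not study data. The t statistic is computed by hand with the standard library so that the sketch runs anywhere; when SciPy is installed, `scipy.stats.ttest_rel` is used to obtain the corresponding two-sided P value.

```python
import math
import statistics


def paired_t_statistic(before, after):
    """t = mean(d) / (sd(d) / sqrt(n)) for the paired differences d = after - before."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    return mean_diff / (sd_diff / math.sqrt(n))


# Hypothetical self-reported scores for four participants (toy values only).
before = [10, 12, 14, 16]
after = [12, 13, 17, 18]

t_stat = paired_t_statistic(before, after)

try:
    from scipy import stats

    p_value = stats.ttest_rel(after, before).pvalue  # two-sided by default
except ImportError:
    p_value = None  # SciPy not available; only the t statistic is computed

significant = p_value is not None and p_value < 0.05
```

The manual statistic and the one returned by `ttest_rel` agree when the arguments are passed in the same order (after, before); the test assumes that the paired differences are approximately normally distributed.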

Ethics approval and consent to participate

The study was reviewed and declared exempt from ethics review by the Cantonal Ethics Committee, Bern, Switzerland (KEK, Req-2021-00225). All the participants were informed about the project and signed an informed-consent form. They had the option to drop out of the study at any time and have their data removed if they wanted to. All research was performed in accordance with relevant guidelines/regulations and the principles of the Helsinki Declaration.